Key Takeaways

- Decentralized Cloud Marketplace: Akash provides a decentralized cloud service, connecting resource providers with users in a peer-to-peer marketplace.

- Cosmos SDK: Built on Cosmos SDK, ensuring interoperability and energy efficiency through Proof-of-Stake consensus.

- Cost-Effective GPU Rentals: Akash offers GPU and CPU resources at a fraction of traditional cloud computing costs, supporting AI and high-performance applications.

- Permissionless and Secure: Enables censorship-resistant app deployment with no data lock-ins.

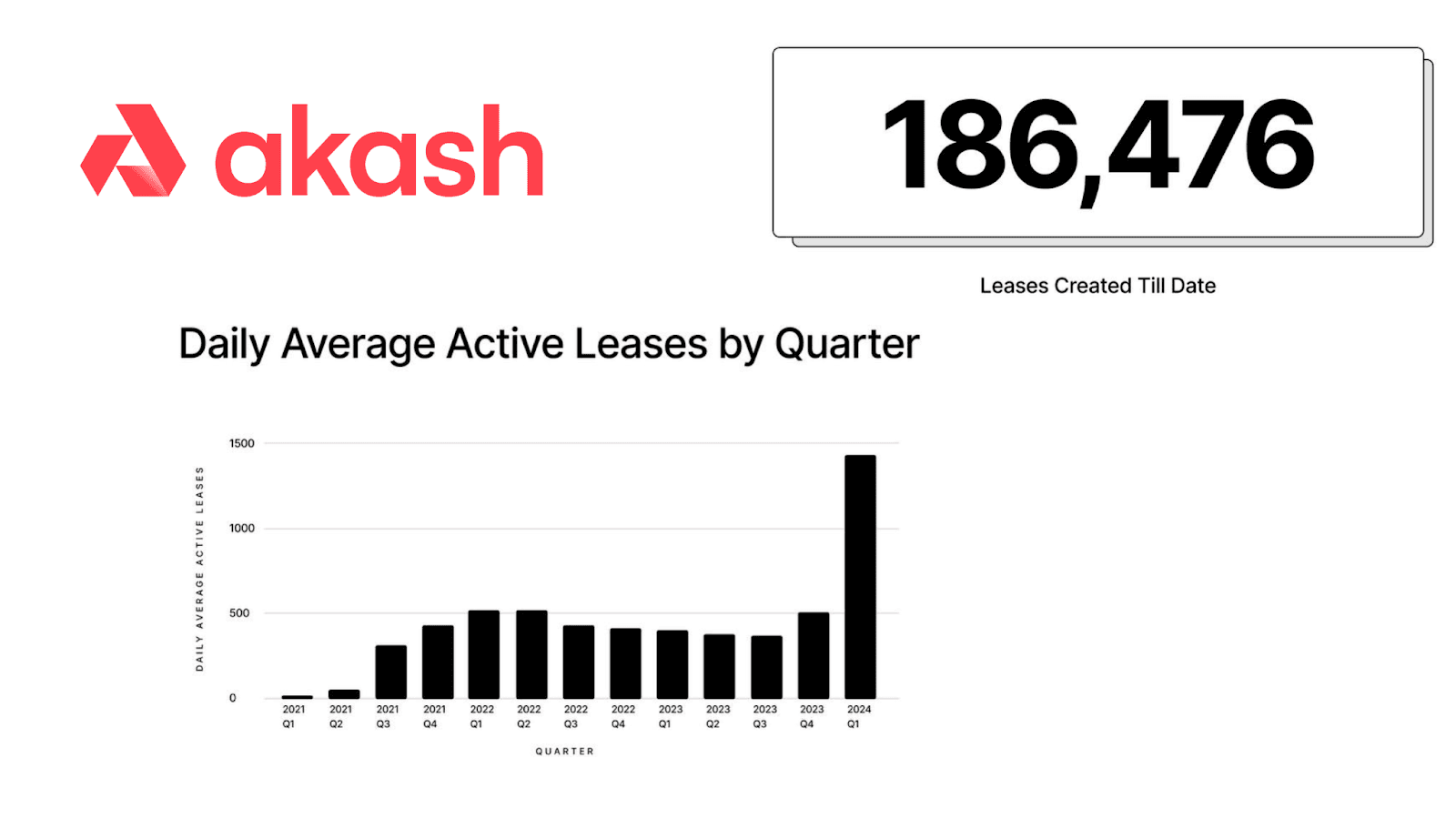

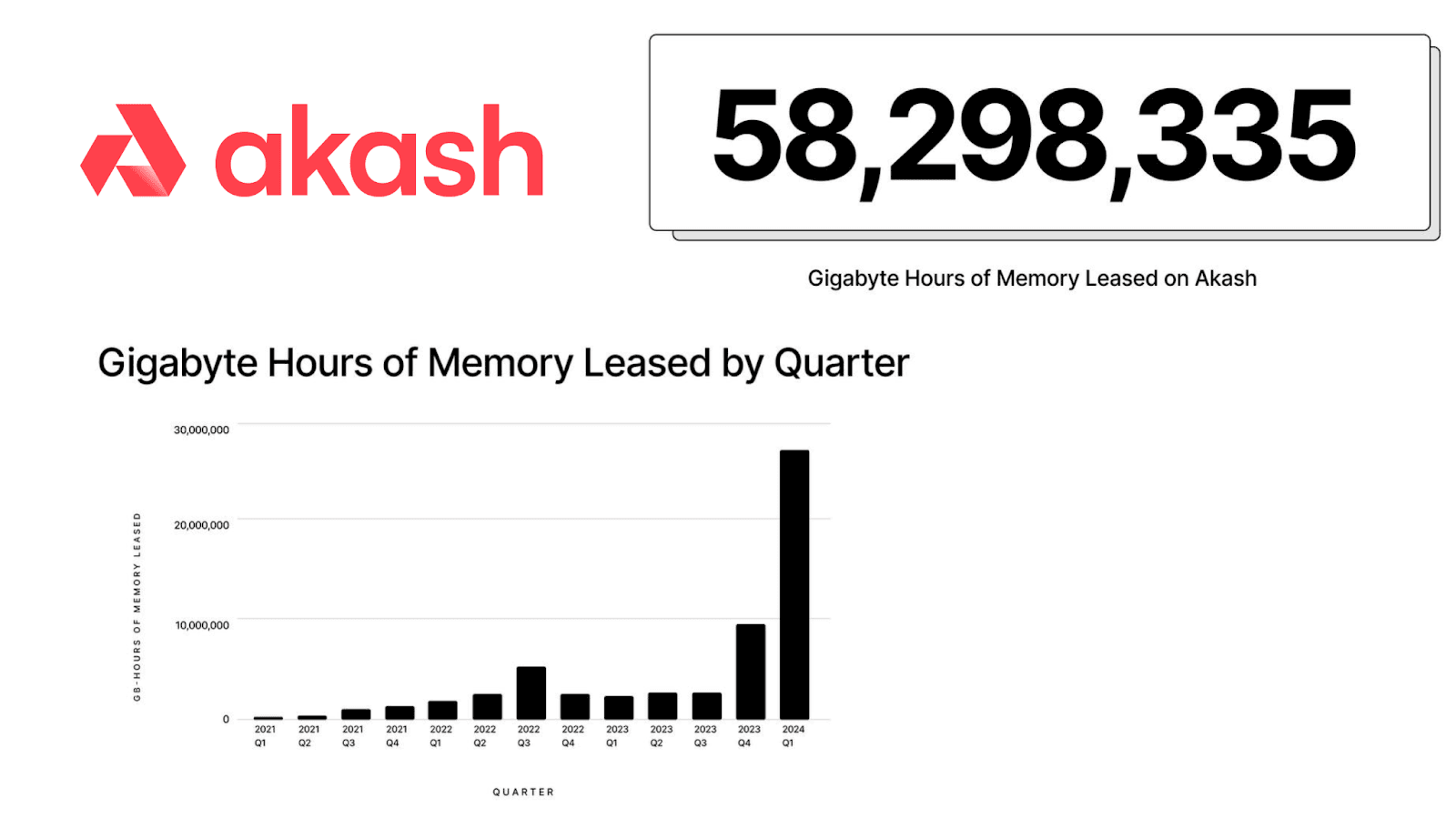

- Global Reach: Supports over 58 million GB hours of memory leased globally.

Present Day Cloud Computing and Data Challenges

In our massively interconnected world, we rely critically on efficient, constantly available online computation to process and secure the many types of data that allow all aspects of our society to operate in a cohesive and organized manner.

This includes the provision of numerous utilities within a large range of sectors encompassing the financial, entertainment, sciences, tech, manufacturing, and communication industries and others; as well as those related to corporate and institutional services such as fixed infrastructure monitoring and management, research, administration, and more.

These can be data processes that enable the connectivity of Internet of Things (IoT) devices, calculations that help initiate the step-by-step undertakings needed to manage global supply chain processes, those related to a wide-range of medical and science applications, and for various modeling utilities through artificial intelligence (AI) and other related technologies.

Regardless, what is commonly referred to as “cloud services” are leveraged within a vast plethora of interconnected industries the world over to address a common barrier to entry for businesses or individuals: access to hardware and infrastructure. In many respects, cloud service is a nebulous term that encompasses a wide variety of actual services, but shares the common trait that the service is hosted and administered remotely from a deployer (a system responsible for setting up, configuring, testing, and deploying a system or software).

As one of the world’s largest cloud services infrastructure providers, Google defines cloud computing as follows: “Cloud computing is the on-demand availability of computing resources (such as storage and infrastructure), as services over the internet. It eliminates the need for individuals and businesses to self-manage physical resources themselves, and only pay for what they use.”

In general, cloud-based services encompass various applications, including:

- Data computing / Software as a Service (SaaS)

- Databases and data storage

- Internet of Things (IoT)

- A.I. and Machine Learning

- Developer tooling

- Communication services

Today, transactions are generally transmitted across publicly accessible networking systems over TCP/IP (the internet's main data transmission protocols) and requests are often not handled in-house but at large data centers or through mega-conglomerate cloud service providers such as Microsoft Azure, Google Cloud Platform (GCP), Hetzner, or Amazon Web Services (AWS). Unfortunately, the fact of the matter is that this design represents a centralized approach to data center and cloud serviceability.

No matter their intended uses, present day cloud service providers are susceptible to many challenges. In particular, these include:

- Centralization and permissioned servicing

- Lack of accessibility and limited resource availability

- Security challenges and data lock-ins

- Ease of use, limited barrier to entry, and high costs

Let’s expand on each of these below:

Centralization and Permissioned Servicing

Centralization is a critical challenge that users of cloud service providers face. One of the ways centralization can affect those using cloud-hosting environments is via changes in service provisions that can be modified by a dishonest operator, meaning a specific class of use cases may be disallowed at a moment's notice by a cloud service operator at their discretion.

A prominent example of this occurring is when cloud service provider Hetzner opted to amend their terms of service and disallow crypto and blockchain related systems to operate on its infrastructure. At the time, they were the second largest cloud service host for Ethereum, meaning this change led to noticeable service disruptions.

Denying clients the right to access cloud services through permissioned (centralized) providers is a grave concern. This issue has been discussed at length by Akash founder Greg Osuri on multiple occasions including in this interview. To put this concept into perspective, in the interview he mentions that 15% of Ethereum nodes and as many as 42% of Solana nodes were indeed running on Hetzner infrastructure, representing a centralized approach to node-resource allocation.

Lack of Accessibility and Limited Resource Availability

There are two main approaches that ensure online computational resources are available when needed.

The first approach is owning the necessary hardware independently, which ultimately requires capital investment and somewhere to house it and maintain it long-term. This could entail co-locating server hardware at a data center with all necessary power sources, cooling systems, physical security, technicians, and network infrastructure provided as a service.

The second approach is to bypass the investment in hardware entirely and subscribe to a cloud service that offers resources at an hourly rate, through a provider such as AWS, Google, or similar. This is often the faster route, and it can be very attractive for smaller businesses and individuals requiring computational services or data servicing.

That said, accessibility issues can be challenging when it comes to large service providers (think Amazon’s AWS, Microsoft Azure, and others). In the event that large portions of a cloud service provider’s hardware infrastructure fail or go offline (issues with nodes, the back-end, etc.), disruptions could last for days or even weeks at a time.

Unfortunately, engineering contingencies and failover systems for such events is much harder when a deployment depends on a single primary provider. It is also extremely challenging from a user perspective when a provider decides that a certain customer or class of customers can no longer be served, because lock-ins make it hard to move elsewhere. Examples include proprietary interfaces, which are commonly used within virtualization solutions, and the incompatibility of logical instances between competing providers.

Finally, some cloud resources may at times be harder to access than others. One example is limited GPU processing time, which can lead to a wide range of challenges, including longer lead times, higher costs, unethical provider arrangements, or resources becoming unavailable at a moment’s notice.

Security Challenges and Data Lock-Ins

Security and confidentiality are critically important to cloud computing systems.

In our online world, many transactions, be they a payment, committing a computational task, or a request for information, are in fact initiated and completed entirely online without any fixed point that acts as a verification layer.

Therefore, the need for strong and trusted cryptographic security has continued to become more prominent. In this context, it is imperative to establish complete trust in the transactional chain from initiator to service provider, despite zero trust existing in interconnected communication channels.

Especially for security critical applications, a customer may take issue if the service provider monitors activities any deeper than just for service relevant administrative purposes.

At times, it is commonplace for service providers to lock their customers (i.e., using a data lock-in) into their own independent ecosystems, making it extremely difficult to fully integrate with or make use of competing external cloud providers or separately owned hardware systems. When this does occur, it often means that the required costs to actually import or export large amounts of data between providers (i.e., ingress/egress costs) can be significant.

Ease of Use, Limited Barrier to Entry and High Costs

On occasion, Software as a Service (SaaS) companies have been known to expend at least 50% of their revenue on cloud services, a practice that ultimately comes at the expense of profitability. In addition, these costs can also extend to blockchain systems, where they can restrict the number of network validators and thereby limit decentralization (i.e., the fewer validators a network has, the more centralized it becomes).

In some instances, opting for a cloud solution can lower the threshold needed to ensure an application continues to be operational, although users often pay a premium for ease of accessibility. Nevertheless, both owned infrastructure and cloud service infrastructure models are used for more specialized tasks in addition to general services such as email, databases, video streaming, and websites on top of pure computational tasks.

Despite the convenience of the increasingly popular cloud model, the hourly cost of cloud services is significant, and reliance on a single provider can be a weak point because service providers sometimes experience outages, for example due to internal misconfigurations during system maintenance.

The cost for hosting a service with a cloud provider is significantly higher than running that same service on your own hardware. However, as it relates to selecting the cloud service provider option, the upfront investment in hardware is not required. Therefore, for a quick deployment, this may be a more attractive alternative.

Alternatively, self-hosting typically requires a substantial upfront investment in servers and data center space, as well as installation, storage space, and ongoing maintenance. In addition, if a deployment is solely based on private hardware availability, it is also important to plan for the possibility of service disruptions and eventual expansion of core hardware infrastructure (i.e., housing more hardware units).

If owned hardware is located at a single site, site disruptions are a real possibility. These can include extended power outages, network disruption, and hardware malfunction. If hardware redundancy is required to ensure service, ideally this redundancy should be located at a separate site, which carries the same up-front and operational costs a second time.

Akash Network: A Decentralized Cloud Marketplace for All

Now that we have touched on the many challenges that the current cloud computing landscape is susceptible to, let’s introduce you to Akash Network and provide an explanation of how Akash solves these issues.

In general, the Akash Network is a Cosmos SDK-based Layer 1 blockchain built on CometBFT and designed to provide the architecture needed to create cloud computing marketplace infrastructure.

More specifically, Akash is a Decentralized Physical Infrastructure Network (DePIN) built to provide decentralized cloud services through a system that enables a peer-to-peer (P2P) marketplace for computing resources at competitive prices. In essence, Akash is a decentralized marketplace for unused computer resources that can be leased by a customer, or “tenant” in Akash parlance. At times, Akash compares its model to an Airbnb for computing resources because of its synergistic relationship between providers and tenants (more on this later).

Because Akash is built using the Cosmos SDK, it is designed to be blockchain-agnostic, meaning it is fully interoperable with all blockchains built atop the larger Cosmos ecosystem (think Celestia, Axelar, Noble, Neutron, Injective, Cosmos itself, and many others). This cross-chain connectivity is critically important to ensure the continued adoption and serviceability of the greater Akash Network.

In many respects, Akash is designed to be significantly more eco-friendly than its centralized alternatives, particularly because it is built using an energy-efficient dPoS consensus model and because it can put the full capacity of existing server hardware to use even when its owners don’t need all of it for their own operations.

Additionally, Akash is built to guarantee data privacy, payment transparency, and immutability from centralized control, while also being able to democratize secure censorship-resistant app deployment.

On Akash, when deploying an application, the configuration and requested resources are defined in a structured file format. The infrastructure is described declaratively in the Stack Definition Language (SDL). For those unfamiliar, SDL is compatible with the YAML standard (a human-readable data serialization language used for writing configuration files), making adoption easy. Importantly, SDL configuration files that follow the YAML standard must use the file ending ‘.yaml’ or ‘.yml’.

The above file format bears a strong resemblance to Docker Compose files (a tool for defining and running multi-containerized applications). More information detailing the specificities that make Docker containers a key component of the Akash Network ecosystem can be found in the section below that discusses the relationship between Providers and Tenants.

According to the Akash documentation, to fully define a deployment, an SDL file should include the following sections that cover the necessary information required to deploy and set up the application and facilitate proper accounting:

- version

- services

- profiles

- deployment

- persistent storage

- gpu support

- stable payment

- shared memory (shm)

- private container registry support

The above illustration is a good example of Infrastructure as Code (IaC). By providing the full declaration of requirements, the deployment becomes fully portable and can be migrated seamlessly between providers and within regions with full control over resources, pricing, and billing.
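To make the declarative idea concrete, here is a minimal sketch of an SDL-like document expressed as a Python dictionary, together with a small check for top-level sections. It assumes that the first four items in the list above (version, services, profiles, deployment) are the top-level sections, and every value in it (service name, image, resource sizes, price, and the placement name `anywhere`) is a hypothetical placeholder. The helper `missing_sections` is an illustrative name and not part of any Akash tooling; the Akash documentation remains the authority on the exact schema.

```python
import json

# Assumption: these four entries from the list above are treated as the
# required top-level sections; the feature-specific entries are omitted here.
REQUIRED_SECTIONS = ("version", "services", "profiles", "deployment")

# Hypothetical single-service deployment; all values are illustrative only.
sdl = {
    "version": "2.0",
    "services": {
        "web": {
            "image": "nginx",
            "expose": [{"port": 80, "as": 80, "to": [{"global": True}]}],
        }
    },
    "profiles": {
        "compute": {
            "web": {
                "resources": {
                    "cpu": {"units": 0.5},
                    "memory": {"size": "512Mi"},
                    "storage": {"size": "512Mi"},
                }
            }
        },
        "placement": {
            "anywhere": {
                "pricing": {"web": {"denom": "uakt", "amount": 1000}}
            }
        },
    },
    "deployment": {"web": {"anywhere": {"profile": "web", "count": 1}}},
}


def missing_sections(document: dict) -> list[str]:
    """Return the required top-level sections absent from an SDL-like dict."""
    return [name for name in REQUIRED_SECTIONS if name not in document]


if __name__ == "__main__":
    print(missing_sections(sdl))
    # JSON is a subset of YAML 1.2, so this output is also valid YAML text.
    print(json.dumps(sdl, indent=2))
```

Because the whole deployment is plain data, the same description can be version-controlled and resubmitted to a different provider without modification, which is the portability property described above.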

Two instances related to the above concept that are worth mentioning in more detail are persistent storage and networking, of which only the former is a required field.

Persistent storage defines what amount of storage is required and billed for. It is currently limited to a single, locally available volume (i.e., shared volumes between services are not available). It should also be noted that there is no cap on storage other than the provider's physical and administrative limitations.

However, there are a few things a tenant will need to keep in mind: The volume content cannot be migrated when redeploying on a different provider, and the persistence is limited to the duration of the lease. Upon provider migration or lease expiry, the data will be irretrievably lost.

The non-required networking field defines what (external) networking requirements an application makes use of. Akash Network offers the option of requesting dedicated public (routable) IPv4 assignments as a paid extra. All addressable ports (i.e., 1-65535) are made available to the tenant. This allows for transparent deployment of publicly available services such as web, DNS, VPN, etc.

Akash refers to itself as a “Supercloud” because it can be considered a “cloud of clouds”.

At present, it can be difficult to gain access to the most cutting-edge GPUs for computational use. Limited supply often makes the lead times and prices for procuring hardware prohibitive, which creates the need for an alternative way to access this capacity.

The supercloud’s permissionless and transparent accessibility to compute resources (including GPU time on a range of hardware configurations) addresses this challenge, with providers ranging from individual instances to hyperscalers.

This model is well suited for workloads that make heavy use of GPU computing, especially workloads that are intermittent or batch-like. AI applications such as the training of large language models (LLMs) are an important example of tasks suitable for on-demand resources like the ones made available through the Akash Network.

Furthermore, Akash democratizes access to premium GPU time by providing accessibility to NVIDIA H100, A100, and A6000 GPUs, among others, which can at times be difficult to obtain on centralized cloud providers because of high demand. On the Akash Network, such resources are offered through reverse auctions, and anyone can bid to acquire the appropriate time.

The Advantages of Akash Network

As a decentralized cloud service provider, Akash allows for the synergistic connectivity of parties with excess resources (providers) to those seeking resources (tenants).

This model allows providers to earn a new revenue source, while simultaneously benefiting consumers. In this way, bids for cloud services are automatically matched with offers in a manner that allows the AKT token to be incorporated into all-important network processes such as staking, governance, transactions, and incentivization.

This is noteworthy because of the fact that global data centers typically leverage most of their computing resources, meaning that those seeking resources may face a host of challenges when interacting with centralized firms.

By leveraging Akash, any type of cloud-native application is easily deployable without requiring it to be written in a new language or to be susceptible to restrictions such as vendor lock-ins. By employing a reverse auction pricing model, developers that deploy applications atop Akash set the terms of their deployment that are then accepted in the event a winning bid is chosen.
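The reverse auction described above can be sketched in a few lines. This is an illustration under stated assumptions, not Akash's actual matching logic: the `Bid` type, the provider names, and the rule of picking the cheapest bid under the tenant's price ceiling are all hypothetical, and a real tenant may weigh other factors such as location or performance.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    provider: str  # hypothetical provider identifier
    price: int     # offered price in uakt (illustrative unit and scale)


def pick_winning_bid(bids: list[Bid], max_price: int) -> Optional[Bid]:
    """Return the cheapest bid at or below the tenant's ceiling, else None."""
    eligible = [bid for bid in bids if bid.price <= max_price]
    return min(eligible, key=lambda bid: bid.price, default=None)


# The tenant states the most it will pay; providers compete downward from there.
bids = [Bid("provider-a", 950), Bid("provider-b", 700), Bid("provider-c", 1200)]
winner = pick_winning_bid(bids, max_price=1000)
print(winner)
```

The key difference from a conventional auction is the direction of competition: the buyer fixes the terms and sellers underbid each other, so prices are pushed down rather than up.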

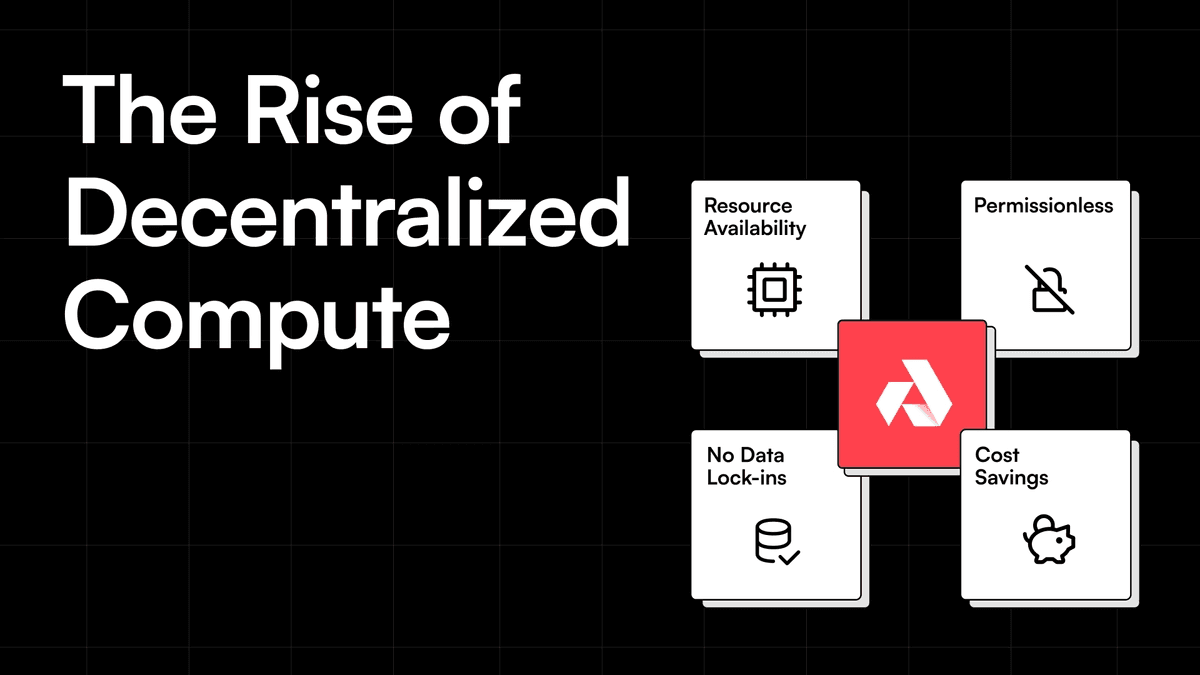

This model exhibits several important advantages, including:

- Permissionless: Akash is designed to be permissionless in nature, meaning that anyone who has the need to access computing resources is able to. As a representation of permissioned cloud services, as of July 2024, ~65% of Ethereum nodes leverage AWS, Hetzner, OVH, or Google infrastructure, representing a plethora of risks should these entities change their conditions and policies. As an example, in 2022, Hetzner mandated anti-crypto policies that further reduced accessibility to cloud services.

- Resource availability: Because of the fact that Akash is open-source in nature, computing resources of any type, in any geographical location can be shared. In general, this is not the case with centralized providers who may not possess various computing resource types, or provide support in some areas.

- Cost: On Akash, prices for cloud computing services are up to 85% lower than their centralized counterparts.

- No data lock-ins: By using Akash, users are able to mix and match who they lease resources from. In addition, platform users are also able to remove their all-important data at any time should they choose to.

Given that it is common for large enterprises to spend up to 50% of their revenue on public cloud hosting, the Akash cost-factor itself is a sufficient consideration when assessing its viability as a cloud provider.

That said, the service is also designed to be permissionless in nature and adaptable, with extensive resource availability and no data lock-ins. This makes it a markedly improved model compared to centralized alternatives, and the possibility exists that the network could gain continued traction moving forward.

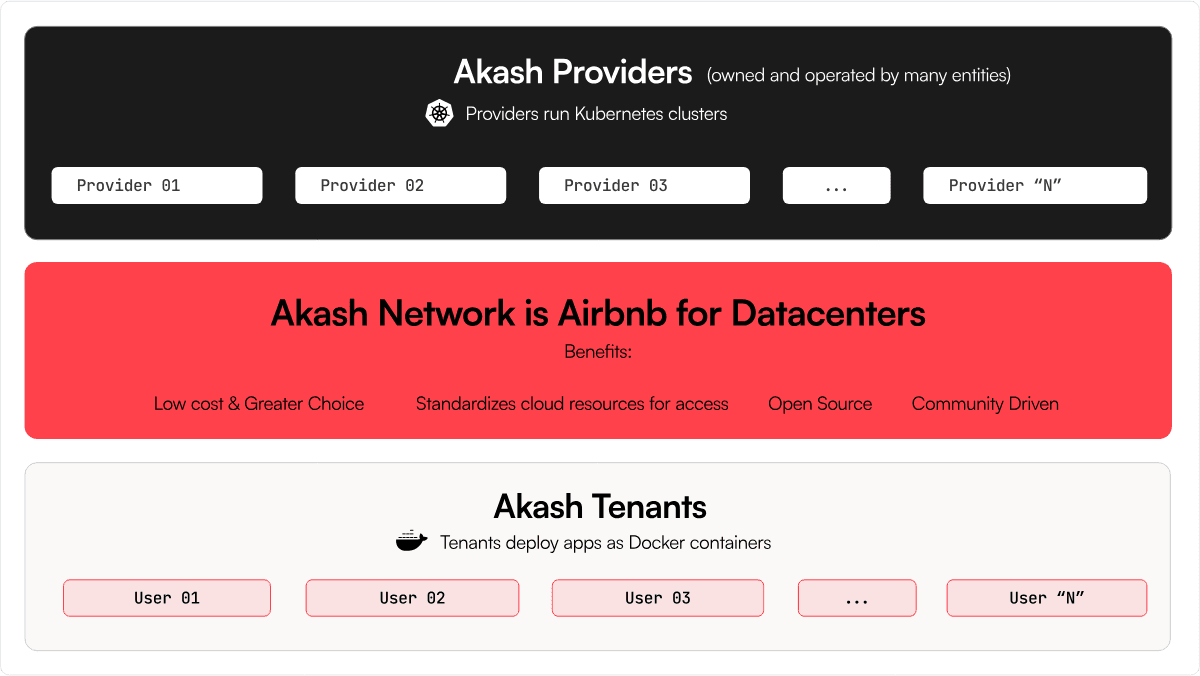

The Relationship Between Akash Providers and Tenants

In general, Akash Network specifically distinguishes between two main user types:

- Providers: Users who offer computational resources (they host applications)

- Tenants: Users who purchase resources similarly to a cloud service (they deploy applications)

The Akash marketplace makes use of what are known as deployments which are used to characterize a resource that a tenant is requesting from the network. More specifically, deployments contain groups (or a grouping of resources) that are meant to be listed together via a single provider.
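One way to picture the relationship between deployments, groups, and resources is as a small nested data structure. The sketch below is only a mental model: the class and field names (`ResourceRequest`, `Group`, `Deployment`, `cpu_units`, and so on) are hypothetical and do not mirror Akash's on-chain types.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceRequest:
    cpu_units: float      # requested CPU cores per instance
    memory_mib: int       # requested memory per instance
    storage_mib: int      # requested storage per instance
    gpu_units: int = 0    # zero means no GPU is requested
    count: int = 1        # number of identical instances


@dataclass
class Group:
    """Resources that are meant to be leased together from one provider."""
    name: str
    resources: list[ResourceRequest] = field(default_factory=list)

    def total_cpu(self) -> float:
        return sum(r.cpu_units * r.count for r in self.resources)

    def total_gpu(self) -> int:
        return sum(r.gpu_units * r.count for r in self.resources)


@dataclass
class Deployment:
    """Everything a tenant is requesting from the network."""
    tenant: str
    groups: list[Group] = field(default_factory=list)

    def total_cpu(self) -> float:
        return sum(g.total_cpu() for g in self.groups)


deployment = Deployment(
    tenant="example-tenant",
    groups=[
        Group(
            name="inference",
            resources=[
                ResourceRequest(cpu_units=0.5, memory_mib=512, storage_mib=512, count=2),
                ResourceRequest(cpu_units=4, memory_mib=16384, storage_mib=10240, gpu_units=1),
            ],
        )
    ],
)
print(deployment.total_cpu(), deployment.groups[0].total_gpu())
```

Grouping matters because a provider bids on a group as a whole, so everything inside one group ends up on the same provider.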

Akash providers are entities that contribute computing resources to the Akash Network. They can be individuals or organizations with underutilized computing resources such as data centers or personal servers. Providers participate in the network by running the Akash node software and setting the price for their services. Users can then choose a provider based on factors such as cost, performance, and location.

For the most part, providers offer their resources in Kubernetes clusters, logical demarcations that hold data services in “containers” isolated from the physical hardware the code runs on. Kubernetes is an enterprise grade open-source solution to compartmentalization of computing resources that has become a prominent de-facto standard used by many major organizations including IBM, OpenAI, Spotify, and many more.

Tenants in turn submit their deployments as Docker containers. Containers are self-contained instances containing all the code and data required for a functional instance of the service deployed, and are therefore portable and largely system-agnostic.

This model allows for fast deployment in a standardized environment that is common between Akash providers and largely compatible with other Kubernetes instances, making it easy to migrate a service between providers and even self-hosted solutions.

To learn more about the relationship between providers and tenants and the entirety of Akash’s underpinning infrastructure, consider reading our second blog post within our Akash Network series.

Benefits of Akash GPU Rentals for the Proliferation of AI

Akash provides a marketplace where tenants can pay by the hour for CPU and GPU computational resources offered by providers. In this model, tenants are able to choose the appropriate number of CPU cores needed, the amount of memory and storage space required, and what type of GPU is required (or if a GPU is required at all).

For many tasks, the versatility of a CPU is perfectly adequate, but they often exhibit limited parallel processing capacity. GPUs on the other hand are specifically designed for parallelization, meaning for massively parallelizable pure computational tasks, GPUs are often considered unmatched. This is especially important because of the fact that increasingly important AI and Deep Learning training applications massively benefit from the characteristics that GPUs provide.

By utilizing Akash’s GPU-CPU service accessibility, prices for these services are typically as low as one-fifth the cost of traditional cloud storage and computing offerings.
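As a rough worked example of what "one-fifth the cost" means in practice, the arithmetic can be sketched as follows. The centralized hourly price and the 730-hour month are hypothetical inputs chosen for illustration; they are not quoted prices from Akash or any cloud provider.

```python
import math

HOURS_PER_MONTH = 730  # assumption: average hours in a month


def savings_percent(centralized_price: float, akash_price: float) -> float:
    """Percentage saved relative to the centralized price."""
    if centralized_price <= 0:
        raise ValueError("centralized_price must be positive")
    return (centralized_price - akash_price) / centralized_price * 100


centralized_hourly = 2.50               # hypothetical USD per GPU-hour
akash_hourly = centralized_hourly / 5   # the "one-fifth" figure from the text

monthly_difference = (centralized_hourly - akash_hourly) * HOURS_PER_MONTH
print(f"savings: {savings_percent(centralized_hourly, akash_hourly):.0f}%")
print(f"monthly difference per GPU: ${monthly_difference:.2f}")
```

A price of one-fifth corresponds to a saving of about 80%, which sits close to the "up to 85% lower" figure cited earlier in the article.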

The Akash marketplace is designed to massively benefit AI hosting through its GPU rental providership. This is especially true for AI developers and researchers who have a high demand for GPU serviceability. To tailor to these customer types, Akash GPU rentals provide a solution that exhibits the following characteristics:

- Cost effective - unlike traditional cloud platforms, Akash is designed to drive down the cost of cloud deployment and especially GPU rentals for AI processing

- Scalable and performant - allowing users to scale their operations and lower costs for AI training models

- Democratized AI accessibility - empowers individuals to participate in AI development and hosting, increasing innovation and technological advancement

- Secure and private - built for AI application confidentiality and security without the susceptibility to vulnerabilities that centralized systems face

- Eco-friendly resource utilization - by providing unbounded accessibility to idle GPU resources, Akash dramatically minimizes environmental impacts of similar centralized services

- Global accessibility - provides GPU accessibility to researchers and AI developers at geographical locations globally instead of in independent concentrated regions

By establishing a cost-effective, secure, and readily available alternative for AI hosting via its GPU marketplace, Akash Network is not only revolutionizing cloud computing, but also making a significant impact on the continuously-evolving field of artificial intelligence.

As of July 2024, Akash Network offers around 4400 CPUs and 360 GPUs on mainnet, with around 880 active CPU leases and approximately 80 GPU leases held by various clients. Some of these leases are likely to be long-term as evidenced by the deployment by several actors such as the medical data handling company Solve Care and several more creative applications.

Akash History and Founding

The parent entity behind the development of Akash Network, Overclock Labs, was founded by CEO Greg Osuri and CTO Adam Bozanich in June 2015.

In 2014, Overclock Labs initiated the development of the very first version of the Akash Network. Then in November 2017, the first version of the Akash technical paper was released and after several testnets and devnets, its mainnet launched for the first time in September 2020. Finally in March 2021, the first mainnet version employing the marketplace format that Akash is known for today launched.

Over the last two decades Akash co-founder Greg Osuri has worked tirelessly to transform the cloud computing landscape. Prior to co-founding Akash, Greg developed an extensive background as an engineer, technical architect, and entrepreneur working initially with IBM and later founding one of the world’s largest hackathon organizations, AngelHack. Osuri also is credited for designing Kaiser Permanente’s first cloud architecture among other initiatives.

Greg is a frequent speaker on blockchain and cloud computing and was instrumental in passing California's first blockchain law and even providing the first expert-witness blockchain testimony at the U.S. Senate. Osuri was also involved in the founding of Firebase (a software company which was eventually acquired by Google), Gridbag, and SBILabs Corp.

Akash co-founder and CTO Adam Bozanich has a background as a software architect and consultant, having spent time at Symantec, Xoopit, Mu Dynamics, and various additional entities in a plethora of software engineering roles since 2005. Overall, Bozanich possesses vast experience with a wide range of technology and computing enterprises across many engineering disciplines.

Other prominent members of Overclock Labs include CFO Cheng Wang, Head of Community Adam Wozney, Head of Marketing Zach Horn, Director of Support Scott Carruthers, Managing Director Tyler Wright, Director of Finance Scott Hewiston, VP of Product, Engineering & Partnerships Anil Murty, Site Reliability Engineer Steve Acerman, Sr. Staff Software Engineer Andrew Hare, and several others.

Advisors to the project include Cosmos OGs responsible for the development of the Cosmos blockchain, Tendermint consensus, the Inter-Blockchain Communication (IBC) Protocol, and related infrastructure. Specifically, these include former Director of Product at Tendermint Labs Jack Zampolin, the founder of Osmosis DEX Sunny Aggarwal, Kava co-founders Brian Kerr and Scott Stuart, and many more.

In an undisclosed funding round in March 2020, Akash raised $2 million through leading venture capital firms Digital Asset Capital Management (DACM), Infinite Capital, Satori Research, and Cypher Capital, with additional contributions from numerous angel investors. A further $800k was secured in October 2020 via an Initial Exchange Offering (IEO) on the Bitmax exchange (now called Ascendex).

Akash Network Use Cases

Outside of its cloud computing and decentralized cloud computing storage service offerings, Akash Network is meant to fulfill a variety of real-world uses. Some of these include providing compute data and utility accessibility via the rental of GPUs and CPUs through the Akash Network marketplace, inference and model training for open source machine learning and AI applications, and Ray Cluster deployment and operation for ML.

Many companies with a core focus on computational tasks such as AI training and services based on blockchain that reach a healthy size will require significant resources. Once the initial growth phase in this niche is passed however, it often makes sense to take the step towards investing in dedicated hardware.

Akash Network provides an excellent incentive to carry out this process: even if the hardware would otherwise be underutilized, its owner can earn rent by leasing out the unused capacity. Owners of hardware can therefore potentially make full use of Akash’s capabilities, and should the need arise, providers are able to expand their own deployment by sourcing more capacity from other peers within the network. On Akash, GPU workloads run as ordinary leases on provider hardware, while the network itself is secured by Proof-of-Stake rather than Proof-of-Work consensus.

Not only is this a convenient way for a startup, small enterprise, or even an academic researcher with massive data sets and limited processing power to meet their computational needs, it lets existing hardware owned by various entities be fully utilized. In addition, it represents a potential solution to the extreme energy efficiency challenges that centralized cloud entities are susceptible to.

Akash Network makes use of two distinct applications where user-application connectivity is realized through the platform. These include the:

- Praetor App: For providers with hardware resources intending to offer access to these services through the Akash Network, the Praetor App is available. Praetor provides a simplified user interface that facilitates the administration and interaction with the Akash Network and its hardware; therefore negating the need for manual configuration from the command line.

- Akash Console: For tenants, the Akash Console is provided to administer the deployment of applications on the Akash Network that possess advanced capabilities. This is typically realized via the deployment of a Docker container (i.e., software in packages) which can be deployed on the Akash console with a few simple clicks.

DeFi apps that are deployable on Akash include Uniswap, Balancer, Synthetix, Yearn Finance, Serum DEX, PancakeSwap, SushiSwap, Uma Protocol, Thorchain BEPSwap, Curve Finance, and others.

Additional applications that are deployable on Akash include those for blogging, gaming, database and administration, hosting, continuous integration, project management, tooling, wallets, Cosmos SDK development, media, data visualization, and others.

Akash Network also offers a strong foundational platform that is able to provide a wide range of user-specific cloud and AI service offerings. Some of these include:

- Solve Care (patient data ownership management): Solve Care is a system designed to uphold the persistence of data beyond the retention time set by care providers and regulations, while simultaneously tying the ownership of patient data to the intended patient. Once this process is initiated, the patient is then able to bring the correct data to their next physician appointment for further analysis. In essence, the Solve Care platform makes use of blockchain to manage various critical aspects of the treatment cycle including: patient identity, medical and diagnostic data, patient consent, along with various transaction and payment types. This serviceability dramatically reduces the cost of delivering explanation of benefits (EOB) documentation in insurance claiming and processing procedures.

- Thumper AI (personalized generative AI): Thumper AI is a platform that provides access to simpler and more efficient visual-artistic generative AI services. The system is primarily used for artistic AI training and processing as a means to simplify workload and increase artistic creation efficiency. Thumper is aimed at creatives of all types and allows users to train models based on their previous work, while also leveraging various AI models and techniques such as LoRA, Stable Diffusion, and more. The system leverages a pay-per-use model and also offers time-based subscription packages.

- Presearch (decentralized search engine): Presearch is a decentralized search engine powered by blockchain. Its main premise is to let users deploy their own nodes to expand the search engine's capabilities. The Presearch system is limited only by the number of providers available and is geared toward solving the search engine data storage and consumption inefficiencies of Web3. In time, the Presearch service is expected to grow to match the level of service needed to operate on commercial dedicated cloud providers.

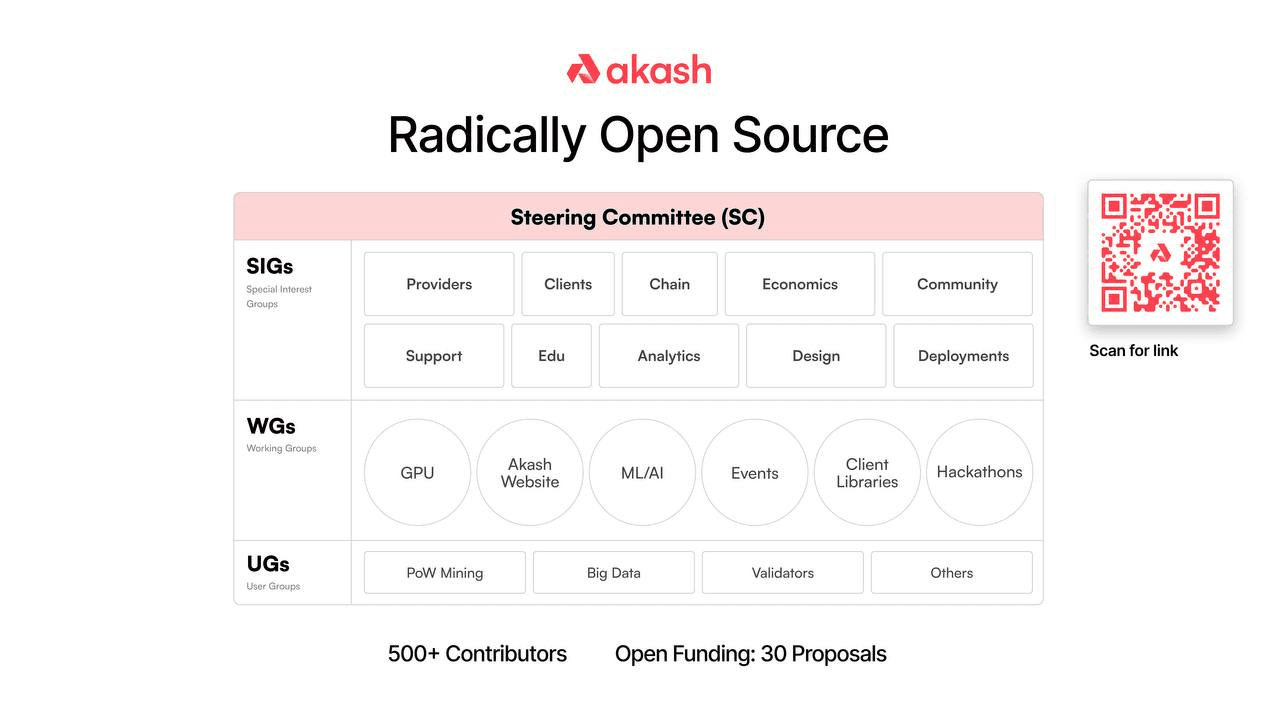

Akash Network Community Groups

The steering committee is a dedicated Special Interest Group (SIG) that periodically evaluates the list of projects being carried out by the global Akash community (more than 500 contributors), prioritizing, adding, or removing projects as necessary. Overall, however, the development of Akash is structured into three distinct Community Groups (CGs):

1.) Special Interest Groups (SIGs)

2.) Working Groups (WGs)

3.) User Groups (UGs)

Each group is classified as a SIG, WG, or UG in a way that allows global community members (developers outside of the Akash team, those hosting Akash Hackathons, or those running providers, tenants, or validator nodes on the network) to contribute to the development of the Akash Network.

This structure is designed to keep development focused on the right priorities as the platform evolves. The idea is for tasks and decisions to be handled in a fully decentralized manner, with no single entity capable of changing the governance, protocol development, or any other parameters of the platform’s evolution without the input of the larger community.

Akash Network Tokenomics

To ensure the utility and operational efficiency of the larger Akash Network, the ecosystem and its underlying protocol use a native token called AKT. More specifically, AKT has the following utilities:

- Security: Akash uses a Delegated Proof-of-Stake (DPoS) security model, and AKT is used to help secure the network through staking. In essence, staking AKT allows stakers to earn passive income while strengthening overall network security.

- Governance: Akash Network is a community-owned and self-governed platform, meaning that all aspects (including the ability to propose future changes) of the network are governed by AKT holders.

- Value exchange: AKT provides a default mechanism for the storage and exchange of value, while also acting as a reserve currency within Cosmos’ multi-currency and multi-chain ecosystem.

- Incentivization: AKT provides incentives for users on the network (both providers and tenants), while also acting as an investment vehicle and an incentive mechanism for network stakers.

At the initial mainnet launch in September 2020, the initial token allocations for the Akash Network's AKT token were as follows:

- 34.5% - Investors

- 27% - Team & advisors

- 19.7% - Treasury & Foundation

- 8% - Ecosystem and awards & grants

- 5% - Testnet incentivization

- 4% - Dedicated for marketing, PR, and vendors

- 1.8% - Public Sale allocation
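As a quick sanity check, the allocation percentages above can be converted into token amounts. The sketch below is illustrative only: it assumes the percentages apply to the 100 million AKT cited for the September 2020 launch, and the names `INITIAL_SUPPLY`, `ALLOCATION_PCT`, and `allocation_amounts` are hypothetical helpers, not part of any Akash tooling.

```python
from decimal import Decimal

# Launch supply as cited in the article. Using it as the base for the
# percentages is an assumption made for illustration.
INITIAL_SUPPLY = Decimal("100000000")

# Allocation percentages as listed in the article.
ALLOCATION_PCT = {
    "Investors": Decimal("34.5"),
    "Team & advisors": Decimal("27"),
    "Treasury & Foundation": Decimal("19.7"),
    "Ecosystem and awards & grants": Decimal("8"),
    "Testnet incentivization": Decimal("5"),
    "Marketing, PR, and vendors": Decimal("4"),
    "Public sale": Decimal("1.8"),
}


def allocation_amounts(supply, shares_pct):
    """Convert percentage shares into token amounts."""
    return {name: supply * pct / Decimal("100") for name, pct in shares_pct.items()}


total_pct = sum(ALLOCATION_PCT.values())
amounts = allocation_amounts(INITIAL_SUPPLY, ALLOCATION_PCT)

if __name__ == "__main__":
    print(f"Total allocated: {total_pct}%")
    for name, amount in amounts.items():
        print(f"{name:<32} {amount:>15,.0f} AKT")
```

Using `Decimal` rather than floats keeps the percentage sum exact, which makes it easy to confirm that the listed shares add up to the full allocation.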

As of July 2024, AKT has a total supply of 247.72 million with a circulating supply of 247.72 million (with all tokens being unlocked) and a maximum supply of 388.54 million. Initially, the Akash team chose a gradual token release schedule that, over a 10-year period, increased AKT's maximum supply from 100 million in September 2020 to 344.13 million by September 2030.
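To put the July 2024 supply figures in proportion, the short sketch below computes how much of the stated maximum supply has been issued and how much remains. It uses only the two figures quoted above (in millions of AKT); the function names are hypothetical and chosen for illustration.

```python
from decimal import Decimal

# Figures quoted in the article for July 2024, in millions of AKT.
TOTAL_SUPPLY_M = Decimal("247.72")
MAX_SUPPLY_M = Decimal("388.54")


def issued_share_pct(total, maximum):
    """Percentage of the maximum supply that has already been issued."""
    return total / maximum * Decimal("100")


def remaining_to_issue(total, maximum):
    """Tokens (in millions) that can still be issued before hitting the maximum."""
    return maximum - total


share = issued_share_pct(TOTAL_SUPPLY_M, MAX_SUPPLY_M)
remaining = remaining_to_issue(TOTAL_SUPPLY_M, MAX_SUPPLY_M)

if __name__ == "__main__":
    print(f"Issued so far: {share:.2f}% of maximum supply")
    print(f"Still to be issued: {remaining} million AKT")
```

On these figures, a little under two thirds of the maximum supply is already in circulation.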

Upon Akash Network’s September 2020 mainnet 1.0 release, 100 million tokens were allocated towards vesting for different groups that had initially contributed to the early development of the Akash Network.

By November 2023, just over three years after initial launch, the 100 million initially vested AKT tokens that had been reserved for team members, the Akash Network Foundation, early investors, and the like were fully distributed, meaning there would be no upcoming token unlocks moving forward.

Recently, the Akash project tabled a proposal called Akash Economics 2.0 to act as a starting point for improving the Akash Network’s economic system. Akash Economics 2.0 is overseen by the Economics Special Interest Group (SIG), which is made up of several early members of the Akash team (including both founders and CFO Cheng Wang).

In essence, the goal of the SIG is to ensure that the AKT utility token is used in a wider range of ways that help incentivize the long-term trajectory and growth of the greater Akash Network. Specifically, the SIG helps incentivize community initiatives, engineering and protocol development, and security budgeting for the project.

The Akash Economics 2.0 proposal was created to act as a starting point to help solve some of the challenges that had limited the initial version of Akash economics, including:

- Initially, the Akash Network required AKT to pay for hosting, with the price determined through an agreement between the provider and the tenant at lease initiation. This can present challenges if a workload such as a website needs to run for a long time, because AKT prices can fluctuate significantly over the duration of the agreement, resulting in the tenant or the provider paying significantly more, or significantly less, than what was initially agreed to.

- The Akash Network is in its infancy, with early product-market fit and a growing demand that is not yet sufficient for providers to commit substantial amounts of computing to the network.

- The community found it not yet large enough to incentivize the continued growth of the Akash Network.

- The AKT token must be able to accrue value beyond incentives to ensure the continued security of the network as it grows.
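The first challenge above, price drift on an AKT-denominated lease, is easy to see with a small worked example. The sketch below is purely illustrative: the lease rate of 10 AKT per month and the six monthly AKT prices are hypothetical numbers, not Akash data, and `lease_cost_usd` is a made-up helper rather than any Akash API.

```python
from decimal import Decimal
from typing import Sequence


def lease_cost_usd(akt_per_month: Decimal, monthly_prices_usd: Sequence[Decimal]) -> Decimal:
    """USD value actually paid when a lease is fixed at a set amount of AKT per month."""
    return sum((akt_per_month * price for price in monthly_prices_usd), Decimal("0"))


# Hypothetical lease: 10 AKT per month, agreed when AKT traded at $3.00.
AKT_PER_MONTH = Decimal("10")

# Hypothetical AKT/USD price in each of the six months the workload runs.
PRICES = [Decimal(p) for p in ("3.00", "3.50", "4.00", "4.50", "5.00", "5.50")]

# What the tenant expected to pay if the price had stayed at the month-one level.
expected = AKT_PER_MONTH * PRICES[0] * len(PRICES)

# What the tenant actually pays in USD terms as the AKT price moves.
actual = lease_cost_usd(AKT_PER_MONTH, PRICES)
drift = actual - expected

if __name__ == "__main__":
    print(f"Expected cost: ${expected}")
    print(f"Actual cost:   ${actual}")
    print(f"Drift:         ${drift}")
```

In this scenario the tenant ends up paying more in dollar terms than planned; if the price had fallen instead, the provider would have received less than expected. Either way, one side of the lease absorbs the volatility.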

To solve these issues and kickstart continued network growth, Akash Economics 2.0 tabled five main initiatives, of which two have already been accepted:

- Take and Make Fees

- Stable Payment and Settlement

The remaining three initiatives are still pending as of July 2024:

- Incentive Distribution Pool

- Provider Subsidies

- Public Goods Fund
