Key Takeaways

- Decentralized AI: Allora leverages a distributed AI framework, enabling context-aware inference generation through decentralized participants.

- Cosmos-based infrastructure: Built on Cosmos, Allora harnesses CometBFT consensus to achieve secure and permissionless AI processing.

- Participant roles: Allora's key participant roles include Workers, Reputers, and Validators, which respectively generate inferences, assess inference and model accuracy, and secure the network.

- Layered architecture: The Allora Network is composed of three layers (Inference, Forecasting & Synthesis, and Consensus) that help structure the protocol’s AI processing workflow.

- Pay-what-you-want model: On Allora, consumers are able to choose the price they pay for inference services, ultimately incentivizing participation and network growth.

Allora – Decentralized Artificial Intelligence Explained

Artificial Intelligence (AI) has become a highly sought-after commodity, enabling context-aware analysis of many complex subjects in a manner similar to human reasoning. However, AI is heavily reliant on processing power, especially during the initial training phase. Even once a model has been adequately trained, it depends on large data sets that take up storage space and must be quickly accessible in order to process queries.

The modern concept of AI has been made possible by the exceptional growth in access to computing power and computer memory. With today's computational capabilities, we are better positioned than ever to have adaptive algorithms learn from large sets of data points and to rely on black boxes (i.e. systems whose responses to given inputs are known, even though the exact mechanisms behind those responses are not).

For an AI to make the best use of this body of knowledge, combining known statistical models and empirical experience into a best-effort response, the interconnectivity of that knowledge matters: the better connected it is, the better the result, to the point of mimicking reasoning and intelligence.

Allora’s goal is to commoditize intelligence by leveraging a massively interconnected network of knowledge and experience, and to improve access to AI-generated inferences that are self-improving: models share knowledge collaboratively, and their outputs are synthesized into a final response.

Allora achieves this goal through novel context-aware, self-improving inference generation, differentiated incentive structures for each actor in the network, and a Cosmos SDK-based Proof-of-Stake (PoS) blockchain employing CometBFT consensus. Because no centralized controlling body can impose its biases, the quality of the Allora Network’s distributed intelligence can evolve organically.

To learn more about the founding of Allora and what makes it important in the continued evolution of AI, consider reading our introductory blog post in this series.

Understanding Allora Network’s Technical Architecture

Allora holds that high-quality AI output requires context-awareness. To produce the best inferences, Allora’s aggregated network-level inferences draw conclusions based on static models for raw data, those models’ previous performance, and their forecasted performance under differing market conditions.

In contrast to most AI companies active today, Allora has adopted a collaborative, decentralized approach without central oversight, which further reduces the risk of corporate bias influencing AI algorithms. A centralized AI that is not under the end user’s control will by design act as a black box, offering little transparency into how it reaches its conclusions. Allora, on the other hand, breaks a query down into AI primitives and relies on the aggregate of a network of replies to that query. Consequently, a much higher degree of transparency into the AI’s reasoning is achieved.

To understand Allora’s decentralized artificial intelligence design, it is important to be aware of a few key concepts:

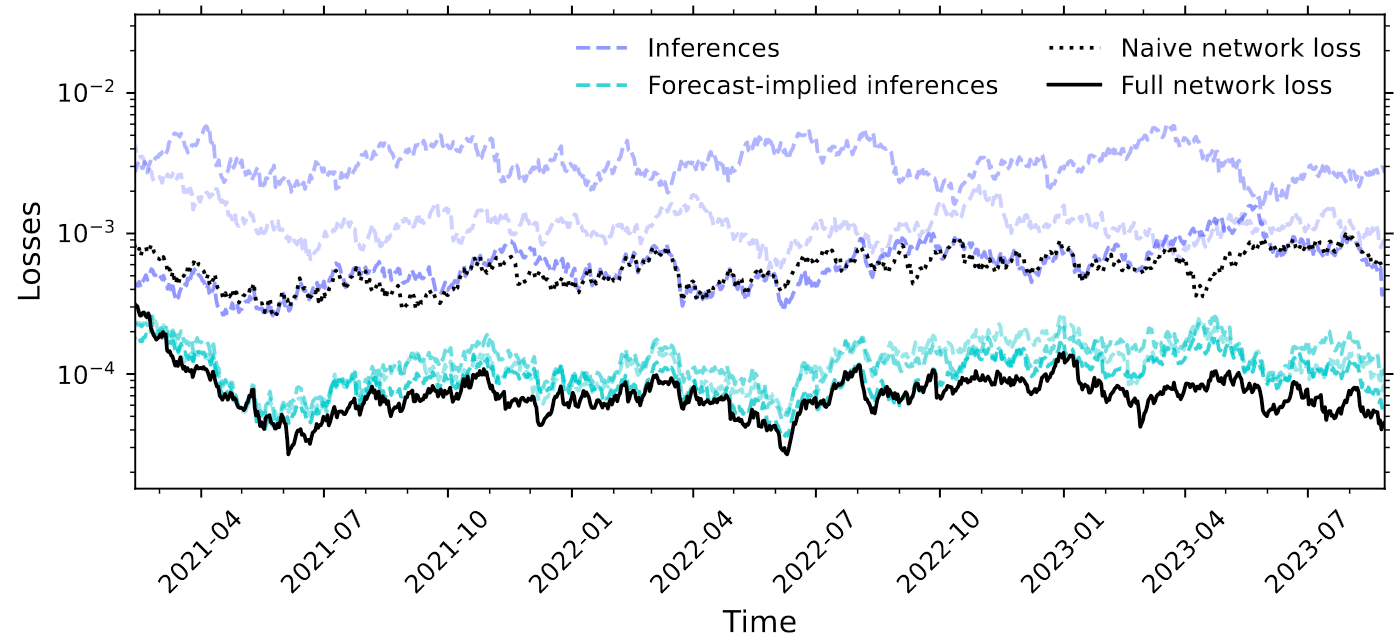

Inference: An inference is the end product of an AI: an “educated guess” for the values of a limited set of output variables, based on a limited set of input variables. The AI model uses its initial and “on-the-job” training to generate an optimized output, the inference. The network differentiates between a “naïve” inference, i.e. one based solely on context-less raw data without taking the combined knowledge of the network into account, and aggregated inferences that take into account forecasts and the reputation weights assigned over multiple rounds of inference generation. The concepts of Ground Truth and reputation are at the core of how Reputers function, which we’ll discuss later.

Topics: To compartmentalize and organize tasks, Allora employs the concept of Topics. Topics are organized as sub-networks, each targeting a narrowly scoped problem with its own target variable or variables and its own loss function. Each Topic sub-network is governed by a set of rules referred to as the Topic Coordinator.
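To make the Topic concept more concrete, here is a minimal Python sketch of what a Topic definition might hold. The field names, the squared-error loss, and the assumption that epoch length is counted in blocks are all illustrative choices, not Allora’s actual on-chain schema.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# A loss maps (prediction, ground truth) to a non-negative number; lower is better.
LossFn = Callable[[float, float], float]


def squared_error(prediction: float, truth: float) -> float:
    """Example loss: squared difference between an inference and the ground truth."""
    return (prediction - truth) ** 2


@dataclass(frozen=True)
class Topic:
    """Hypothetical Topic definition; field names are illustrative, not Allora's schema."""
    topic_id: int
    description: str
    target_variables: Tuple[str, ...]  # what this Topic's inferences predict
    loss_fn: LossFn                    # how inference accuracy is measured
    epoch_length: int                  # sampling interval (assumed to be in blocks)

    def loss(self, prediction: float, truth: float) -> float:
        return self.loss_fn(prediction, truth)


# A hypothetical Topic predicting a single asset price one day ahead.
eth_24h = Topic(
    topic_id=1,
    description="ETH/USD price 24 hours ahead",
    target_variables=("ETH/USD",),
    loss_fn=squared_error,
    epoch_length=720,
)
print(eth_24h.loss(3050.0, 3000.0))  # 2500.0
```

Keeping the loss function as a field reflects the idea that every Topic defines its own notion of accuracy.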

Loss Function: A loss is a value that describes how accurate an inference is within a given context, and the system seeks to minimize it (a lower value equates to higher accuracy). Losses are computed by dedicated participants on the network, the Reputers (see below), and forecast in advance by forecasting Workers. Because this value is tied directly to the context, it is at the core of what makes Allora’s AI context-aware.
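As an illustration of “lower loss means higher accuracy”, the sketch below scores a few inferences with squared error and ranks them. Squared error is an assumption made for the example (each Topic defines its own loss function), and the worker names and values are made up.

```python
def squared_error(prediction: float, truth: float) -> float:
    """Loss for one inference; lower values mean higher accuracy."""
    return (prediction - truth) ** 2


def rank_by_loss(inferences: dict[str, float], truth: float) -> list[tuple[str, float]]:
    """Return (worker, loss) pairs ordered from most to least accurate."""
    losses = {worker: squared_error(value, truth) for worker, value in inferences.items()}
    return sorted(losses.items(), key=lambda item: item[1])


# Hypothetical inferences for a target whose realized value is 100.0.
ranking = rank_by_loss({"worker_a": 98.0, "worker_b": 103.0, "worker_c": 100.5}, truth=100.0)
print(ranking)  # [('worker_c', 0.25), ('worker_a', 4.0), ('worker_b', 9.0)]
```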

Regret: On Allora, Regret measures whether an inference performs better or worse than previously calculated inferences. Regret is used to assign weights to individual inferences, and these weights are then used in the final synthesis of the answer that is passed on to the Consumer.
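The sketch below shows one way regret could be turned into weights for the final synthesis. It assumes regret is the log-ratio between a reference loss (for example, the network’s previous combined loss) and a worker’s own loss, and it maps regrets to weights with a softmax. Both choices are simplifying assumptions for illustration and are not Allora’s exact formulas.

```python
import math


def regrets(worker_losses: dict[str, float], reference_loss: float) -> dict[str, float]:
    """Positive regret means the worker's loss beat the reference loss."""
    return {w: math.log(reference_loss) - math.log(loss) for w, loss in worker_losses.items()}


def weights_from_regrets(regret: dict[str, float]) -> dict[str, float]:
    """Softmax mapping: higher regret gets a larger weight; weights sum to 1."""
    top = max(regret.values())  # subtract the max for numerical stability
    exps = {w: math.exp(r - top) for w, r in regret.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def synthesize(inferences: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted combination of individual inferences into one answer."""
    return sum(weights[w] * value for w, value in inferences.items())


# Hypothetical current inferences and past losses for three workers.
inferences = {"worker_a": 98.0, "worker_b": 103.0, "worker_c": 100.5}
past_losses = {"worker_a": 4.0, "worker_b": 9.0, "worker_c": 0.25}
w = weights_from_regrets(regrets(past_losses, reference_loss=1.0))
print(round(synthesize(inferences, w), 2))  # 100.42
```

With these assumptions, the worker that has historically beaten the reference (worker_c) dominates the combined answer, while the others still contribute a small share.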

Ground Truth: Allora uses the term ground truth for a real-world value, sampled once it becomes available. For example, if a requested inference predicts the value of an asset at some point in the future, the ground truth is the asset’s actual value once that point in time arrives.

Epoch: Within the Allora ecosystem, the epoch length refers to how often a Topic is sampled for inferences and forecast scoring. In computing, the term “epoch” typically refers to the zero point of a common time representation (e.g. UNIX time), while in blockchains it generally denotes the length of a consensus round. Given the risk of confusion with these established definitions, Allora’s use of the term in the context of inference generation should be kept clearly distinct from them.
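To keep Allora’s meaning of “epoch” separate from the UNIX and consensus-round meanings, it can help to view it as a simple sampling schedule. The sketch below assumes a Topic’s epoch length is counted in blocks from a hypothetical start height; the function names are illustrative.

```python
def is_sampling_block(height: int, epoch_length: int, start_height: int = 0) -> bool:
    """True if a Topic would be sampled for inferences at this block height."""
    if epoch_length <= 0:
        raise ValueError("epoch_length must be positive")
    if height < start_height:
        return False
    return (height - start_height) % epoch_length == 0


def epoch_index(height: int, epoch_length: int, start_height: int = 0) -> int:
    """Which Topic epoch (0-based) a block height falls in."""
    return (height - start_height) // epoch_length


def sampling_blocks(first: int, last: int, epoch_length: int, start_height: int = 0) -> list[int]:
    """All sampling heights in the inclusive range [first, last]."""
    return [h for h in range(first, last + 1) if is_sampling_block(h, epoch_length, start_height)]


print(sampling_blocks(100, 130, epoch_length=10, start_height=95))  # [105, 115, 125]
print(epoch_index(118, epoch_length=10, start_height=95))           # 2
```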

Allora Network Participants

Allora’s decentralized network is made up of several participant categories, each of which performs a specific role in requesting or generating an inference. Allora groups them into two supercategories:

- Supply Side

- Demand Side

On Allora, Workers, Reputers, and validators are collectively referred to as the supply side. Supply-side participants contribute computational work, ultimately spending energy to provide inferences and administer the network. Consumers, on the other hand, make up the demand side. In Allora’s case, the demand is for inferences, the commodity the network ultimately provides.

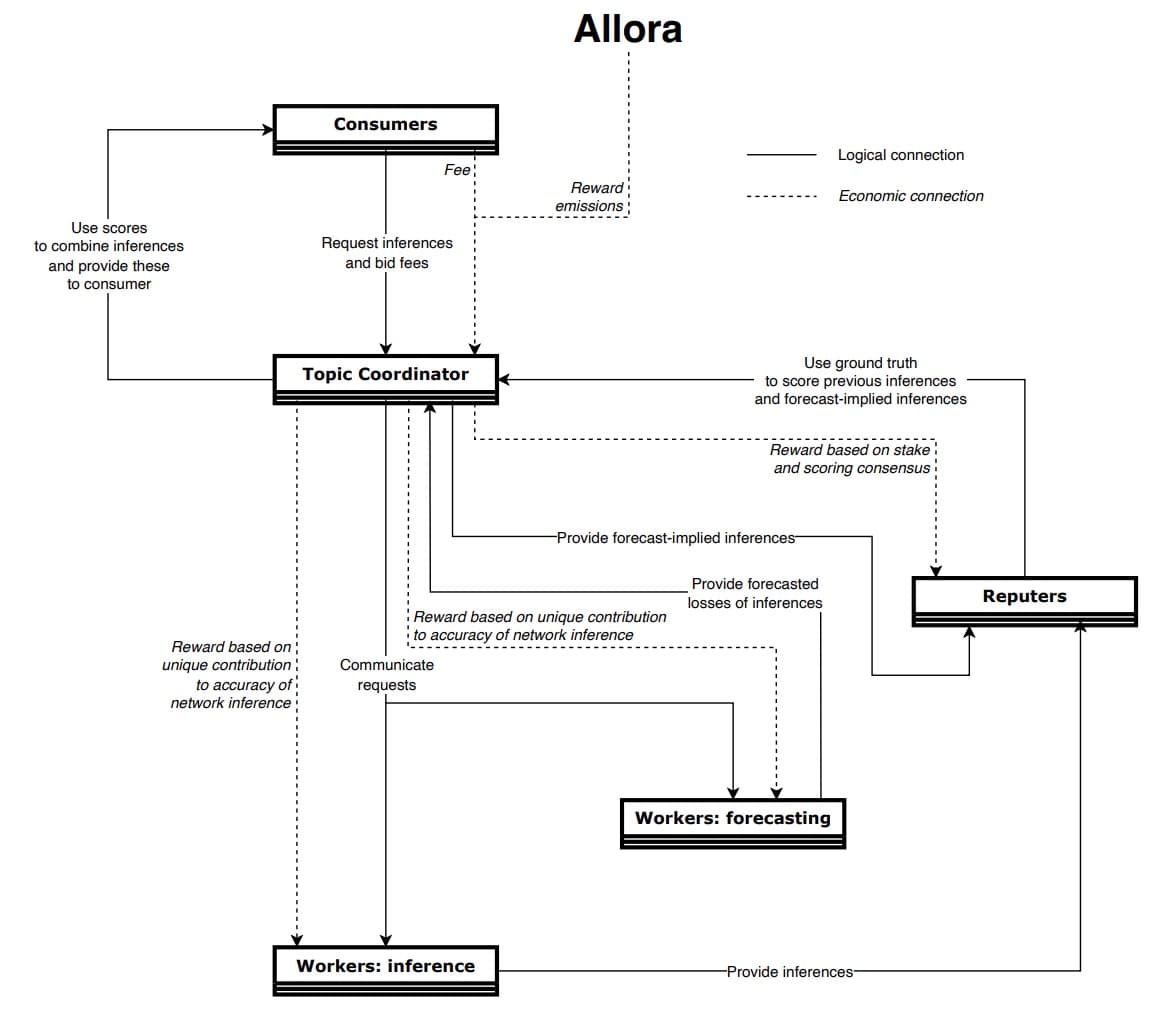

When a Consumer requests an inference from the Allora Network, the request is passed on to a Topic Coordinator. The coordinator distributes the request to several Workers who individually process the request and forecast the quality of each other's responses to the query. Each individual inference is then passed on to the Reputers and the forecasts to the Topic Coordinator.

The Reputers score the inferences and pass them on, together with their quality scores, to the Topic Coordinator, which compiles the final inference based on accuracy forecasts and reputation weights and returns it to the Consumer. Finally, the Topic Coordinator assigns rewards according to the accuracy of the network’s inferences and scores.
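The request flow above can be simulated in a few lines. This is a minimal sketch under loud assumptions: the `Submission` structure, the inverse-loss weighting, the squared-error scoring, and the proportional reward split are hypothetical simplifications chosen for readability, and reputation weights from earlier rounds are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    worker: str
    inference: float                   # the Worker's own answer to the query
    forecast_losses: dict[str, float]  # the Worker's predicted loss for each peer


def synthesize(subs: list[Submission]) -> float:
    """Topic Coordinator: weight each inference by the inverse of its mean forecasted loss."""
    inverse = {}
    for s in subs:
        predicted = [o.forecast_losses[s.worker] for o in subs if s.worker in o.forecast_losses]
        if not predicted:
            raise ValueError(f"no forecasts for {s.worker}")
        mean_loss = sum(predicted) / len(predicted)
        inverse[s.worker] = 1.0 / (mean_loss + 1e-9)  # epsilon avoids division by zero
    total = sum(inverse.values())
    return sum(inverse[s.worker] / total * s.inference for s in subs)


def reputer_losses(subs: list[Submission], truth: float) -> dict[str, float]:
    """Reputers: squared-error loss of each inference against the ground truth."""
    return {s.worker: (s.inference - truth) ** 2 for s in subs}


def allocate_rewards(losses: dict[str, float], payment: float) -> dict[str, float]:
    """Topic Coordinator: split the payment in proportion to accuracy (inverse loss)."""
    inverse = {w: 1.0 / (loss + 1e-9) for w, loss in losses.items()}
    total = sum(inverse.values())
    return {w: payment * v / total for w, v in inverse.items()}


# Each Worker answers the query and forecasts how well its peers will do.
subs = [
    Submission("a", 98.0, {"b": 9.0, "c": 1.0}),
    Submission("b", 103.0, {"a": 4.0, "c": 0.5}),
    Submission("c", 100.5, {"a": 5.0, "b": 8.0}),
]
network_inference = synthesize(subs)              # returned to the Consumer
losses = reputer_losses(subs, truth=100.0)        # once the ground truth is known
rewards = allocate_rewards(losses, payment=10.0)  # c, the most accurate, earns most
```

Separating synthesis (based on forecasts, available immediately) from reward allocation (based on losses, available only after the ground truth) mirrors the two phases described above.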

Now that you're familiar with Allora's different network participants and overall technical architecture, you may be curious to learn more about Allora's continually evolving ecosystem in our third article of this series.

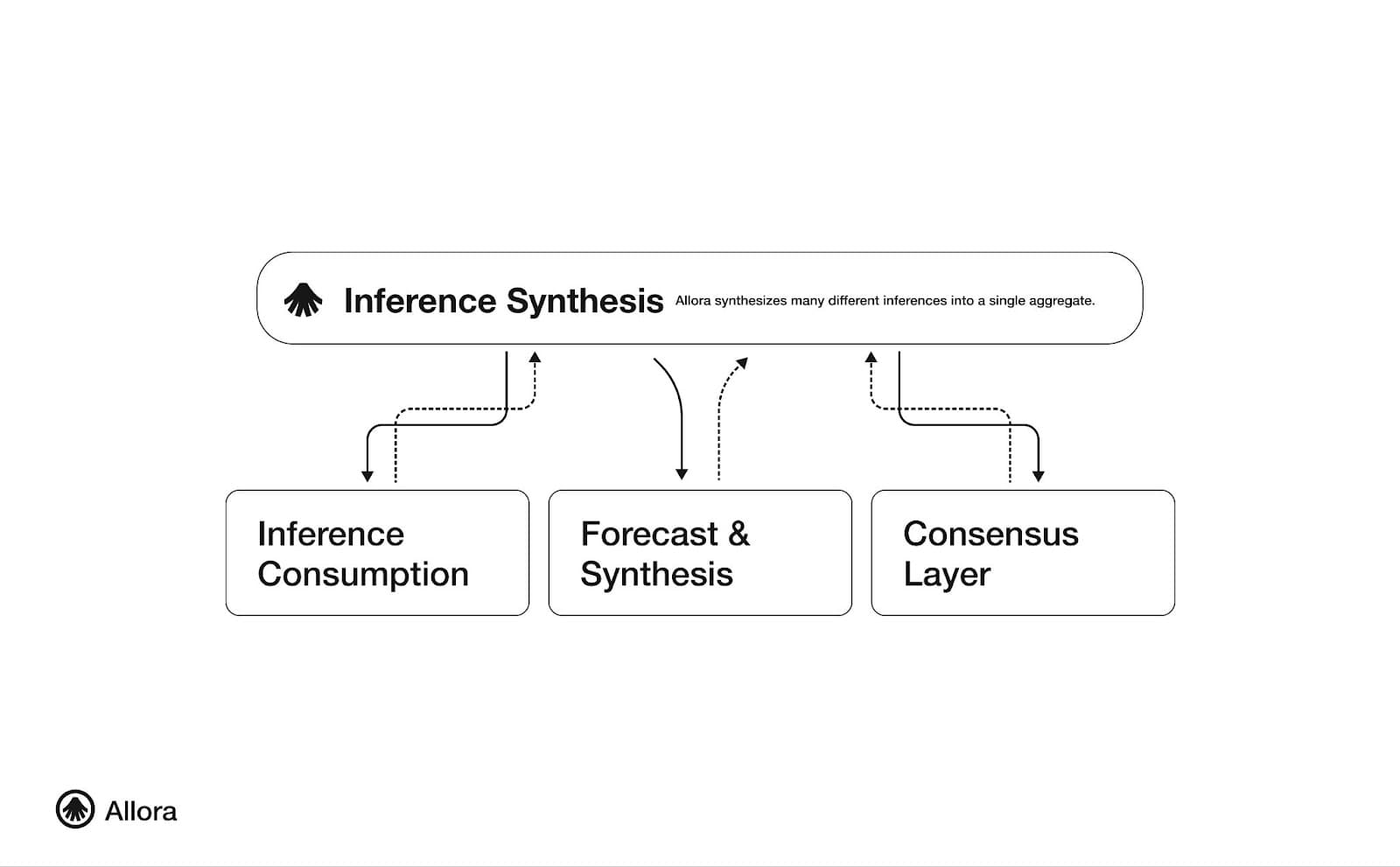

Allora Network Layers

Allora employs a layered structure designed to provide a framework and marketplace for AI-generated inferences. This layered structure separates Consumers and AI Workers from the administrative consensus mechanism and is highly decentralized. Allora’s three main architectural layers are:

- Inference Consumption: The inference consumption layer facilitates the exchange of requests and inferences between Consumers and Workers. Topic coordination also takes place in this layer, and Reputers operate here as well. As noted previously, Reputers “score” the inferences produced by Workers, comparing them to the ground truth (i.e. real-world values) when available.

- Forecasting and Synthesis: The forecasting and synthesis layer enables the network’s self-improvement and aggregates replies to inference requests. In this process, forecast Workers score the produced inferences, and Topic Coordinators combine the whole body of knowledge before delivering a final weighted inference to the Consumer. This general workflow is typically conducted as follows (keep in mind that a Worker can work on inferences, on forecasting, or both):

- Consensus: Allora uses a specialized Cosmos appchain to coordinate a large community of participants, ensure the integrity of the Allora Network, and enforce the economics of the system. The consensus layer consists of network validators, which help keep the environment secure and permissionless at all times. As it pertains specifically to the network’s rewards structure, the network’s various participant roles accrue calculated rewards as follows:

The Blockchain That Makes Computers Think

Allora is built on Cosmos with CometBFT consensus, providing permissionless, trustless, and decentralized network access for all involved. The Allora Network uses the native ALLO token and employs a differentiated incentive model for active supply-side participants that depends on effort, stake, or both. This incentive structure is also designed to mitigate the effect of any participant gaining disproportionate influence over the system’s reasoning capabilities.

A novel feature of the Allora Network is that inference generation operates on a Pay-What-You-Want (PWYW) model: no prices for inferences are imposed, either by the supply side or by the network itself. Mechanisms are nonetheless in place to disincentivize Consumers from opting for zero payment, since a zero-payment inference request generates no rewards for participants. At the same time, the model still encourages adoption, as even a small payment from a Consumer results in rewards for supply-side participants.
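The PWYW rule can be sketched as a function from the Consumer’s chosen payment to participant rewards. The proportional split and the contribution weights below are hypothetical; the only behavior taken from the text is that a zero payment generates no rewards while any positive payment rewards the supply side.

```python
def request_rewards(payment: float, contributions: dict[str, float]) -> dict[str, float]:
    """Split a Consumer's freely chosen payment across supply-side participants.

    A zero payment yields zero rewards for everyone; any positive payment, however
    small, yields a positive reward for every participant with a positive contribution.
    """
    if payment < 0:
        raise ValueError("payment cannot be negative")
    total = sum(contributions.values())
    if payment == 0 or total == 0:
        return {p: 0.0 for p in contributions}
    return {p: payment * c / total for p, c in contributions.items()}


# Hypothetical contribution weights for one inference request.
contrib = {"worker": 3.0, "reputer": 1.0, "validator": 1.0}
print(request_rewards(0.0, contrib))  # {'worker': 0.0, 'reputer': 0.0, 'validator': 0.0}
small_tip = request_rewards(0.05, contrib)  # every participant still earns something
```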

Allora’s differentiated incentive structure is based on the work a participant performs and the stake it holds in the system. As noted previously, this maintains a balance between staked interest and performance. The weights given to inferences and used to generate the final composite answer are therefore driven by accuracy rather than by the influence of large stakers. This also prevents a predictor that performs well initially from gaining ever more influence through a positive feedback loop and skewing the results once its predictions start to veer off target.

Validators and Reputers

Similar to other PoS networks, Allora employs validators to secure the network, whereby validators stake ALLO via a Delegated Proof-of-Stake (DPoS) system. On Allora, validators maintain consensus on the blockchain through a balanced voting process and are rewarded in proportion to their share of the total network stake, which encourages them to act in the network’s best interest.
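Stake-proportional validator rewards can be illustrated with a small sketch that first totals each validator’s bonded ALLO (self-bond plus delegations) and then splits a reward by stake share. The delegation records and reward amount are made up, and real Cosmos SDK distribution also involves validator commission and per-delegator accounting, which this sketch omits.

```python
from collections import defaultdict


def validator_stake(delegations: list[tuple[str, str, float]]) -> dict[str, float]:
    """Sum the ALLO bonded to each validator from (delegator, validator, amount) records."""
    totals: dict[str, float] = defaultdict(float)
    for _delegator, validator, amount in delegations:
        totals[validator] += amount
    return dict(totals)


def stake_proportional_rewards(stake: dict[str, float], reward: float) -> dict[str, float]:
    """Split a reward among validators in proportion to their share of total stake."""
    total = sum(stake.values())
    return {v: reward * s / total for v, s in stake.items()}


# Hypothetical delegation records.
delegations = [
    ("val_1", "val_1", 500.0),   # self-bond
    ("alice", "val_1", 1500.0),
    ("val_2", "val_2", 1000.0),  # self-bond
    ("bob", "val_2", 1000.0),
    ("carol", "val_2", 1000.0),
]
stake = validator_stake(delegations)
print(stake_proportional_rewards(stake, reward=100.0))  # {'val_1': 40.0, 'val_2': 60.0}
```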

Allora also employs Reputers, which, like validators, are required to hold a stake. However, their incentives follow a formula mathematically derived to reward high accuracy. Reputers compare real-world data, once it has been sourced, against the inferences produced, and they play an integral role in allocating rewards to Workers, since those rewards are calculated based on accuracy.
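Because Reputers can only score an inference once the real-world value has been sourced, their work is naturally deferred. The sketch below assumes a hypothetical `ReputerQueue` keyed by epoch and a simple accuracy score of 1 / (1 + absolute error), with Worker rewards split in proportion to that score. Allora’s actual Reputer and reward formulas are more involved and are not reproduced here.

```python
from dataclasses import dataclass, field


@dataclass
class ReputerQueue:
    """Hypothetical store of inferences awaiting their ground truth, keyed by epoch."""
    pending: dict[int, dict[str, float]] = field(default_factory=dict)

    def record(self, epoch: int, worker: str, inference: float) -> None:
        self.pending.setdefault(epoch, {})[worker] = inference

    def settle(self, epoch: int, truth: float) -> dict[str, float]:
        """Score an epoch once its ground truth is sourced; 1.0 means a perfect inference."""
        inferences = self.pending.pop(epoch, {})
        return {w: 1.0 / (1.0 + abs(v - truth)) for w, v in inferences.items()}


def worker_rewards(scores: dict[str, float], budget: float) -> dict[str, float]:
    """Split a reward budget among Workers in proportion to their accuracy scores."""
    total = sum(scores.values())
    if total == 0:
        return {}
    return {w: budget * s / total for w, s in scores.items()}


queue = ReputerQueue()
queue.record(epoch=7, worker="worker_a", inference=99.0)
queue.record(epoch=7, worker="worker_b", inference=104.0)
# Later, once the real-world value for epoch 7 is known:
scores = queue.settle(epoch=7, truth=100.0)
print(scores)  # {'worker_a': 0.5, 'worker_b': 0.2}
rewards = worker_rewards(scores, budget=7.0)
```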

Context-awareness Improves Inferences

An AI algorithm that makes predictions and evaluations from a given data set will only ever be as good as the data available. However, an AI can also base its predictions on experience and take unseen, empirically derived parameters into account, much like a human brain would. This lies at the core of why AI is useful in so many scenarios.

An AI network takes this a step further by combining several predictions, each based on a different model, into a single answer. That said, a simple network that is unaware of context can only improve up to a point. A networked approach will indeed improve the quality of an inference, but making the individual models context-aware can improve the final outcome even further.

Allora introduces context-awareness through the mechanisms described above. Workers forecast their own accuracy and communicate these predictions to the network, while Reputers give high scores to accurate Workers, so that models providing the best inferences are rewarded and underperforming models are pushed to evolve. As a result, the network’s combined accuracy improves progressively. Furthermore, the scores of individual inferences allow a much more accurate weighting to be assigned to each inference in the final synthesis of the reply to the Consumer.


The information provided by DAIC, including but not limited to research, analysis, data, or other content, is offered solely for informational purposes and does not constitute investment advice, financial advice, trading advice, or any other type of advice. DAIC does not recommend the purchase, sale, or holding of any cryptocurrency or other investment.